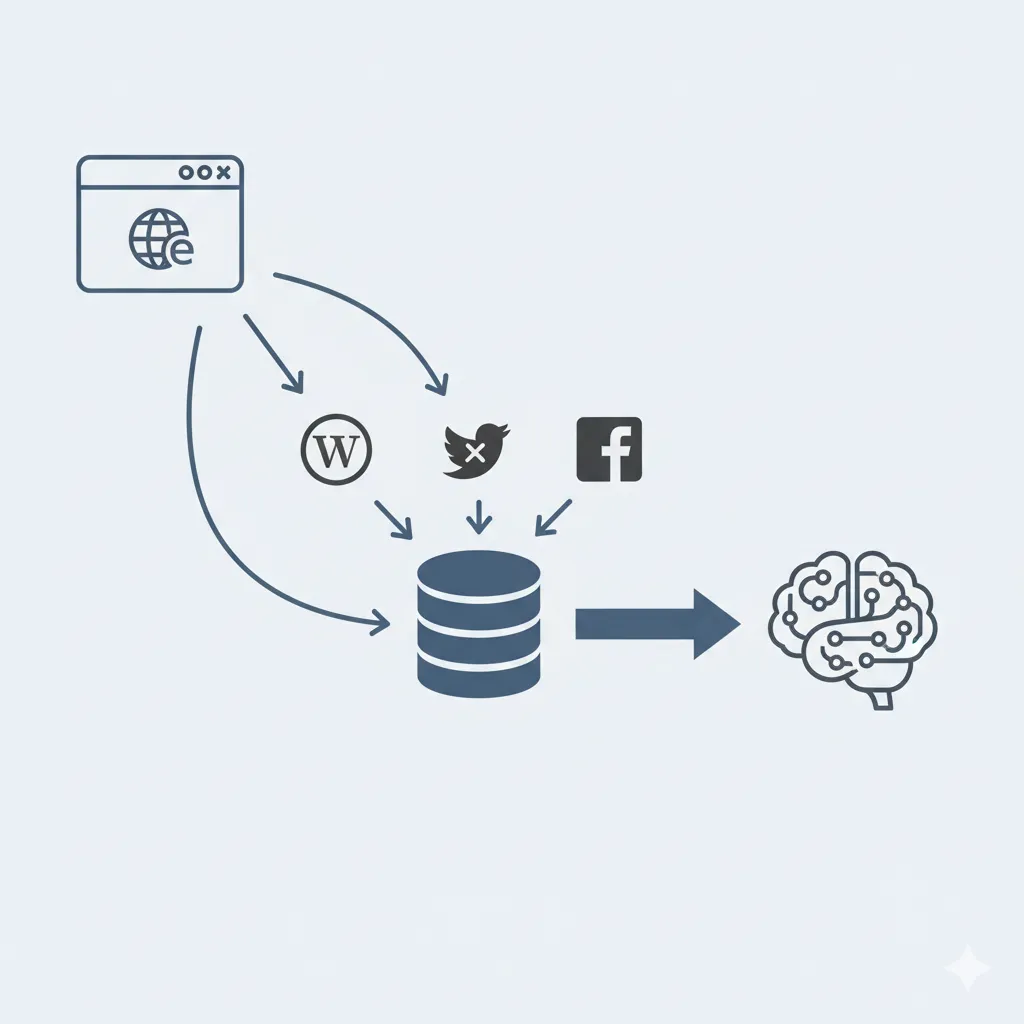

AI models need diverse, high-quality data to stay effective. A major problem arises when they are trained on content generated by other AI systems: performance declines steadily. Outputs become less varied, more error-prone, and narrower. Fresh data from real human sources helps counter this decline, and regular collection from the current web keeps models accurate and capable.

What Causes Model Collapse?

Model collapse is the progressive degradation of a generative model's performance. It occurs mainly through recursive training on synthetic outputs: each new model learns from data produced by the previous one and inherits its flaws. Diversity in vocabulary, syntax, and meaning decreases over iterations. Rare patterns disappear first. Errors accumulate and become entrenched.

Studies report this effect in language models, image generators, and other generative systems. When real data is replaced by synthetic versions, performance drops noticeably. Tasks that require creativity or the handling of edge cases suffer most. Without correction, the damage compounds with each generation.
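A toy simulation makes the mechanism concrete. It is not taken from any particular study. Here a "model" is simply the empirical token frequencies of its training data, and each generation is trained only on samples drawn from the previous generation. The token names and counts are made up for illustration. A model can only emit tokens it has already seen, so a rare token that misses one round of sampling can never return. As a result, the number of distinct tokens can only stay flat or shrink.

```python
import random
from collections import Counter


def resample_generation(data: list[str], rng: random.Random) -> list[str]:
    """Fit a 'model' (the empirical token frequencies of `data`) and
    generate a synthetic dataset of the same size by sampling from it."""
    counts = Counter(data)
    tokens = list(counts)
    weights = [counts[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=len(data))


def simulate_collapse(generations: int = 20, seed: int = 0) -> list[int]:
    """Return the number of distinct tokens present at each generation."""
    rng = random.Random(seed)
    # Hypothetical "human" corpus: three common tokens plus five rare ones.
    real = ["the"] * 50 + ["model"] * 30 + ["data"] * 15 + [f"rare_{i}" for i in range(5)]
    data = real
    support_sizes = [len(set(data))]
    for _ in range(generations):
        # Each generation trains only on the previous generation's output.
        data = resample_generation(data, rng)
        support_sizes.append(len(set(data)))
    return support_sizes


if __name__ == "__main__":
    print(simulate_collapse())
```

Trying a few different seeds tends to show the `rare_*` tokens dropping out within a handful of generations while the common tokens survive.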

The Rise of Data Pollution Online

The web used to hold mostly human-created text: articles, posts, discussions. This variety supported strong early AI training. Now synthetic content spreads quickly. Estimates from 2025 indicate that a large share of new web pages include AI-generated material, and the web crawls used to build training datasets pick it up. Feedback loops form. Models shift toward generic patterns and lose touch with real human expression.

This affects practical applications. Summarization tools miss subtle details. Models used in specialized fields such as law or medicine overlook uncommon but important information. Overall progress in AI slows if contaminated data keeps circulating.

Limits of Synthetic Data Alone

Synthetic data allows quick scaling and fine control, but it fails as the main long-term source. Models trained mostly on it hit limits fast. They overemphasize frequent patterns. Outliers fade. Bias intensifies. Output variety shrinks.

Research from recent years indicates:

- Replacing real data with synthetic data leads to collapse.

- Accumulating synthetic data alongside real data can stabilize performance.

- Purely recursive loops cause irreversible quality loss.

Even a small share of synthetic data can start these problems if it is not offset by fresh human input.
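The same toy setup can illustrate the difference between replacing and accumulating data. This is an illustrative model, not a reproduction of the research summarized above. In "replace" mode, each generation sees only the previous generation's output. In "accumulate" mode, the original real data and every earlier synthetic batch stay in the training pool. The function and token names are hypothetical.

```python
import random
from collections import Counter


def sample_from(pool: list[str], k: int, rng: random.Random) -> list[str]:
    """Fit empirical token frequencies to `pool` and draw k synthetic tokens."""
    counts = Counter(pool)
    tokens = list(counts)
    return rng.choices(tokens, weights=[counts[t] for t in tokens], k=k)


def run(mode: str, generations: int = 30, seed: int = 1) -> list[int]:
    """Track how many distinct tokens remain in the training pool per generation."""
    rng = random.Random(seed)
    # Hypothetical real corpus: two common tokens and ten rare ones.
    real = ["a"] * 60 + ["b"] * 30 + [f"rare_{i}" for i in range(10)]
    pool = list(real)
    sizes = [len(set(pool))]
    for _ in range(generations):
        synthetic = sample_from(pool, len(real), rng)
        if mode == "replace":
            pool = synthetic           # real data and older generations are discarded
        elif mode == "accumulate":
            pool = pool + synthetic    # real data and older generations are kept
        else:
            raise ValueError(f"unknown mode: {mode!r}")
        sizes.append(len(set(pool)))
    return sizes


if __name__ == "__main__":
    print("replace:   ", run("replace"))
    print("accumulate:", run("accumulate"))
```

In accumulate mode the real data never leaves the pool, so every token it contains stays available. In replace mode the count can only hold or fall. The sketch tracks only which tokens survive, not how their frequencies drift, so it captures just one aspect of collapse.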

For reliable large-scale collection of current human-generated content, residential proxies make a difference. They use real home IP addresses to route requests. This makes scraping look like normal browsing. It helps avoid blocks, rate limits, and detection. For residential proxies with over 30 million IPs, city-level targeting, dynamic rotation, and strong anonymity suited to data gathering, see https://stableproxy.com/en/proxies/residential
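Here is a minimal sketch of routing requests through a rotating pool of proxies, using only Python's standard library. The proxy URLs, credentials, and User-Agent string are placeholders. They do not reflect the real endpoint format of any provider, so check your provider's documentation for connection details. Some providers rotate IPs on their side behind a single gateway address, in which case the client-side cycling shown here is unnecessary.

```python
import itertools
import urllib.request
from collections.abc import Iterator

# Hypothetical endpoints: substitute the host, port, and credentials your provider issues.
PROXY_POOL = [
    "http://user:pass@proxy-a.example:8000",
    "http://user:pass@proxy-b.example:8000",
    "http://user:pass@proxy-c.example:8000",
]


def make_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that sends both HTTP and HTTPS traffic through one proxy.
    Credentials embedded in the proxy URL are sent as Proxy-Authorization."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def rotating_openers(pool: list[str]) -> Iterator[tuple[str, urllib.request.OpenerDirector]]:
    """Yield (proxy_url, opener) pairs, cycling through the pool round-robin."""
    for proxy_url in itertools.cycle(pool):
        yield proxy_url, make_opener(proxy_url)


def fetch(url: str, opener: urllib.request.OpenerDirector, timeout: float = 15.0) -> str:
    """Download one page through the given opener and decode it as text."""
    # Placeholder User-Agent; use one that honestly identifies your client.
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0 (example-crawler)"})
    with opener.open(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

Usage: `openers = rotating_openers(PROXY_POOL)`, then for each page call `proxy_url, opener = next(openers)` followed by `fetch(page_url, opener)`, so consecutive requests leave from different addresses.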

Why Fresh Web Data Matters Most

Ongoing access to live human content anchors models to reality. It captures changes in language, events, and perspectives. Regular updates keep models from drifting away from the true data distribution. They restore lost details and reduce error buildup.

This approach supports better generalization. Models handle uncommon situations more reliably. It also limits bias reinforcement from homogenized synthetic sources.

Web Scraping as the Direct Remedy

Web scraping gathers data directly from live sites. It provides up-to-date, varied inputs in large volumes. This refreshes training sets and breaks the cycle of homogenization. Focused crawls target valuable sources such as forums, news sites, and reviews.
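A focused crawl needs two pieces: extracting readable text from each page and deciding which links to follow next. The sketch below uses the standard library's `html.parser`. The section prefixes `/forum/`, `/news/`, and `/reviews/` are hypothetical stand-ins for the valuable areas mentioned above and would need adjusting for any real site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PageParser(HTMLParser):
    """Collect visible text and link targets, skipping script/style contents."""

    SKIP_TAGS = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def parse_page(html: str) -> tuple[str, list[str]]:
    """Return (visible text, raw hrefs) for one HTML document."""
    parser = PageParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks), parser.links


def focused_links(base_url: str, hrefs: list[str],
                  prefixes: tuple[str, ...] = ("/forum/", "/news/", "/reviews/")) -> list[str]:
    """Keep same-site links whose path starts with one of the target prefixes."""
    host = urlparse(base_url).netloc
    keep = []
    for href in hrefs:
        absolute = urljoin(base_url, href)  # resolve relative links like "/forum/t/1"
        parts = urlparse(absolute)
        if parts.netloc == host and parts.path.startswith(prefixes):
            keep.append(absolute)
    return keep
```

The text from `parse_page` goes into the training set, and the output of `focused_links` goes back into the crawl queue. Restricting links to a few sections keeps the crawl on high-value pages rather than the whole site.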

Combined with effective proxies, scraping scales without frequent interruptions and enables continual fine-tuning. The result is models that remain grounded and useful over time.
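When a request is blocked or rate-limited, a common pattern is to retry through a different IP after a growing delay. This sketch assumes a caller-supplied `fetch(url, proxy)` function that returns page text or raises `OSError` on failure. The name `fetch_with_rotation`, its parameters, and the default delays are all hypothetical choices.

```python
import time
from typing import Callable, Sequence


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], str],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Fetch `url`, moving to the next proxy and waiting longer after each failure.

    urllib's URLError, HTTPError (e.g. 403 or 429) and socket timeouts are all
    OSError subclasses, so blocks, rate limits and dropped connections are retried.
    """
    if not proxies:
        raise ValueError("need at least one proxy")
    last_error = None
    for attempt in range(max_attempts):
        proxy = proxies[attempt % len(proxies)]   # a different IP on each retry
        try:
            return fetch(url, proxy)
        except OSError as exc:
            last_error = exc
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ... with the defaults
    raise RuntimeError(f"all {max_attempts} attempts failed for {url}") from last_error
```

Passing `sleep` as a parameter lets the retry logic be tested without real waiting. Exponential backoff also eases pressure on the target site instead of hammering it immediately after a rate-limit response.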

Key Advantages of Residential Proxies

These proxies route through genuine residential connections. Requests appear as everyday user activity.

Benefits include:

- High anonymity with hidden original IP

- Low chance of detection by sites

- Precise geo-targeting by country, region, or city

- Options for dynamic IP changes or static assignments

- Support for high-volume operations

They work well for scraping, market monitoring, SEO tracking, and similar tasks. Coverage spans major locations, including the US, UK, and Germany.

Path to Stronger AI Systems

The data crisis calls for deliberate action. Rely on current, human-sourced information to sustain quality. Web scraping, backed by suitable tools, supplies what synthetic methods lack. Consistent human input keeps AI aligned with the world, preserving its capability and broad usefulness in the years ahead.